Building an AI AppSec Team

The New Cybersecurity Heroes

The Rise of AI

Artificial intelligence (AI) is revolutionizing industries by making tasks faster and more efficient. Among these advancements, AI's role in cybersecurity has become increasingly important as it helps combat complex cyber threats.

AI Agents are intelligent, autonomous systems capable of performing tasks with minimal human intervention. Their significance is growing quickly across industries, and nowhere is this more apparent than in cybersecurity. This article looks at how AI Agents, especially when several of them collaborate (an arrangement sometimes loosely compared to swarm intelligence), are redefining cybersecurity and offering new solutions to complex challenges.

Understanding AI Agents in Cybersecurity

From chatbots for security advisories to autonomous systems for threat detection, AI Agents are diverse. Their capabilities extend beyond simple automation; they include adaptive learning, predictive analytics, and real-time decision-making, all crucial for staying ahead in cybersecurity.

We have already adopted assistants for basic tasks such as report generation, and co-pilots for code completion or even writing boilerplate code. The range of possible applications is wide.

Experiment

To find out how useful they are, I took on a day-to-day task of an application security engineer. I used a multi-agent framework in which each agent can have its own prompt, LLM, tools, and other custom configuration so that it collaborates well with the other agents. That means there are two main considerations when thinking about multi-agent workflows:

What are the multiple independent agents?

How are those agents connected?

This multi-agent framework focuses on collaboration: the agents share information with one another much as members of a team would.

Here are the Agents and a breakdown of the roles of each AI agent in our security task:

Code Reviewer: Reviews code to find and identify vulnerabilities.

Exploiter: Creates exploits based on these vulnerabilities.

Mitigation Expert: Fixes the vulnerabilities by revising the code.

Report Writer: Writes detailed reports on the identified vulnerabilities.

Task Sequence

Review: The Code Reviewer starts by examining the code for any security weaknesses.

Exploit: Next, the Exploiter takes these findings and tries to create exploits, testing how the vulnerabilities might be abused.

Mitigate: The Mitigation Expert uses the insights from the exploits to patch and secure the code.

Report: Finally, the Report Writer documents these findings in a clear, detailed report.
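To make the roles and the sequence concrete, here is a minimal, framework-agnostic sketch. It is not the framework used in this experiment: `Agent`, `run_pipeline`, and `stub_llm` are hypothetical names, and `stub_llm` only stands in for a real model call. The point is the shape: each agent has its own instructions and model, and each step sees the code plus everything produced before it.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns model text.
LLM = Callable[[str], str]


@dataclass
class Agent:
    role: str
    instructions: str
    llm: LLM  # each agent may be backed by a different model

    def run(self, task: str, context: str) -> str:
        prompt = f"{self.instructions}\n\nTask: {task}\n\nContext:\n{context}"
        return self.llm(prompt)


def run_pipeline(steps: list[tuple[Agent, str]], code: str) -> dict[str, str]:
    """Run agents in order; each one sees the code plus all earlier outputs."""
    outputs: dict[str, str] = {}
    context = code
    for agent, task in steps:
        result = agent.run(task, context)
        outputs[agent.role] = result
        context += f"\n\n[{agent.role}]\n{result}"
    return outputs


def stub_llm(prompt: str) -> str:
    """Placeholder for a real model call; it just echoes the first prompt line."""
    return f"(model output for: {prompt.splitlines()[0]})"


steps = [
    (Agent("Code Reviewer", "Find vulnerabilities in the code.", stub_llm), "Review"),
    (Agent("Exploiter", "Write exploits for the reported issues.", stub_llm), "Exploit"),
    (Agent("Mitigation Expert", "Patch the vulnerable code.", stub_llm), "Mitigate"),
    (Agent("Report Writer", "Document the findings clearly.", stub_llm), "Report"),
]

if __name__ == "__main__":
    for role, text in run_pipeline(steps, "exec(task_code)").items():
        print(f"{role}: {text}")
```

In a real run, `stub_llm` would be replaced by a call to the chosen model, and a manager agent could decide the order instead of the fixed list used here.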

LLM used in this experiment: mixtral-8x7b-32768

Vulnerable code, which I took from ChatGPT:

from flask import Flask, request, jsonify, session
from flask_session import Session
import os

app = Flask(__name__)
app.config["SECRET_KEY"] = "a_very_secret_key"
app.config["SESSION_TYPE"] = "filesystem"
Session(app)

@app.route('/login', methods=['POST'])
def login():
    username = request.form['username']
    password = request.form['password']
    # Simulate user authentication (in real-world, use secure password handling)
    if username == "admin" and password == "adminpass":
        session['logged_in'] = True
        session['username'] = username
        # Session token is simply the username reversed - a predictable scheme!
        session['token'] = username[::-1]
        return jsonify({"message": "Login successful", "token": session['token']}), 200
    return jsonify({"message": "Login failed"}), 401

@app.route('/admin/tasks', methods=['POST'])
def admin_tasks():
    task_code = request.form['code']
    auth_token = request.headers.get('Authorization')
    # Improper check: Only verifies token exists and matches the reversed username (predictable)
    if session.get('logged_in') and auth_token == session.get('username')[::-1]:
        # Execute administrative task based on the code
        exec(task_code)  # Example of dangerous functionality
        return jsonify({"message": "Task executed"}), 200
    return jsonify({"message": "Unauthorized access"}), 403

if __name__ == '__main__':
    app.run(debug=True, ssl_context='adhoc')  # Use SSL in production

The concept is simple: do what a security engineer (or team) would normally do when given a codebase. Let’s dive into the run.

When the agents start running, a manager coordinates all the tasks between them. Here is the general flow:

Task 1 - Review

Given a codebase, the manager identifies that it has a coworker that can perform the code review.

The Code Reviewer pinpoints the critical vulnerabilities, as instructed. It doesn't stop there; across multiple runs it digs deeper and finds more potential issues. "Curiosity killed the cat, but in cybersecurity, curiosity catches the bugs," as the manager might say. The manager then poses follow-up questions to evaluate whether these additional findings could escalate to critical threats. For example, it asks “how the logging is being done” to see whether that finding can be escalated to critical.
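The follow-up behaviour can be pictured as a small triage loop. This is only a sketch under my own assumptions: `Finding`, `triage`, and `canned_reviewer` are hypothetical names, the severity labels are invented for illustration, and the canned answers are placeholders rather than output from the experiment.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    title: str
    severity: str  # assumed labels: "critical", "medium" or "low"


def triage(findings: list[Finding], ask_reviewer: Callable[[str], str]) -> list[Finding]:
    """Ask one follow-up per non-critical finding; escalate on a 'yes' answer."""
    for finding in findings:
        if finding.severity == "critical":
            continue  # already at the top level, nothing to ask
        answer = ask_reviewer(
            f"Can '{finding.title}' be escalated to critical? Answer yes or no, then explain."
        )
        if answer.strip().lower().startswith("yes"):
            finding.severity = "critical"
    return findings


def canned_reviewer(question: str) -> str:
    """Placeholder for the Code Reviewer agent; returns fixed answers."""
    return "Yes, sensitive data may be written." if "logging" in question else "No."


if __name__ == "__main__":
    found = [Finding("exec on user input", "critical"), Finding("verbose logging", "low")]
    for item in triage(found, canned_reviewer):
        print(item.title, "->", item.severity)
```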

Task 2 - Exploit

Post-review, findings are relayed back to the manager, who now shifts gears to exploiting these vulnerabilities. Here’s a peek at the exploit process for one vulnerability.

The agent’s output wasn't exactly the 'plug and play' type but think of it as a gourmet recipe that needs a bit more seasoning. This showcases the agent’s ability to not only split tasks into bite-sized pieces but also cook up a strategy to achieve its goals, which is pretty clever for a bot!

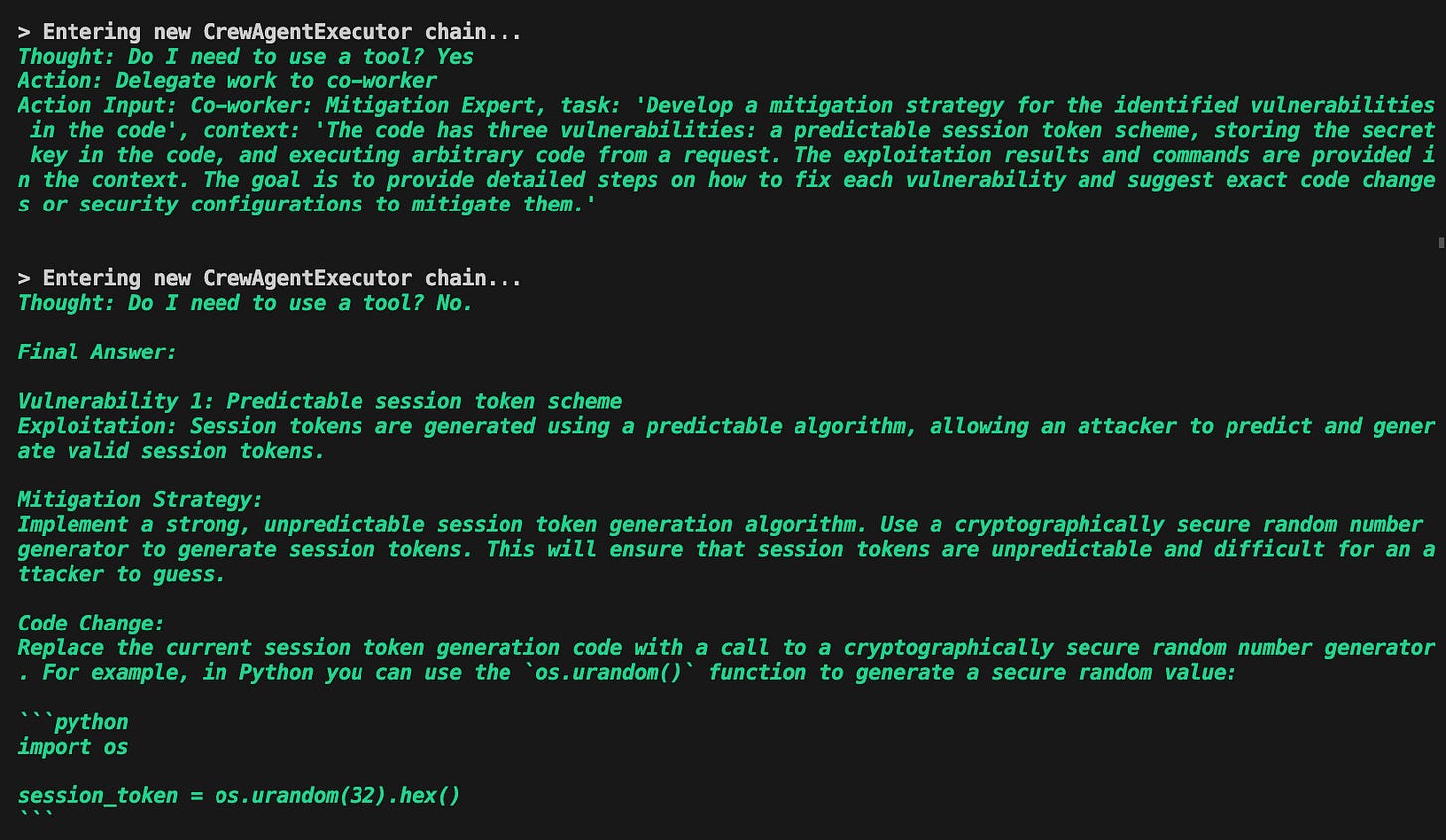
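One of the weaknesses in the sample app is easy to show without any agent at all. The sketch below simply mirrors the token scheme from the vulnerable code; `predict_token` is a hypothetical helper name. Note that the `/admin/tasks` endpoint also checks the session, so this shows only that the `Authorization` value adds no secrecy, not a complete attack.

```python
def predict_token(username: str) -> str:
    """Mirror of the sample app's scheme: the token is the username reversed."""
    return username[::-1]


if __name__ == "__main__":
    # Anyone who knows the username can derive the token offline.
    print(predict_token("admin"))  # nimda
```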

Task 3 - Mitigate

Now armed with detailed info on the vulnerabilities and how they can be exploited, it's time for some damage control. The manager consults the Mitigation Expert, tasking them with developing fixes and suggesting the best tools for the job.

The Mitigation Expert steps up, recommending specific libraries and code adjustments. It’s like giving the codebase shiny new armor, making it tough enough to withstand the malicious requests crafted by its co-worker, the Exploiter.
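The agent's exact recommendations are not reproduced here, so the following is my own sketch of the kind of fixes that address the two most obvious problems in the sample app: the predictable token and `exec` on request data. It uses only the standard library (`secrets`, `hmac`); the task names and helper functions are hypothetical.

```python
import hmac
import secrets
from typing import Optional


# Hypothetical admin tasks: fixed, reviewed functions instead of arbitrary code.
def rotate_logs() -> str:
    return "logs rotated"


def clear_cache() -> str:
    return "cache cleared"


ALLOWED_TASKS = {"rotate_logs": rotate_logs, "clear_cache": clear_cache}


def new_session_token() -> str:
    """Unpredictable token, replacing the reversed username."""
    return secrets.token_urlsafe(32)


def token_matches(supplied: Optional[str], expected: Optional[str]) -> bool:
    """Constant-time comparison that also rejects missing tokens."""
    if not supplied or not expected:
        return False
    return hmac.compare_digest(supplied.encode(), expected.encode())


def run_task(name: str) -> str:
    """Dispatch by name from an allowlist instead of calling exec()."""
    task = ALLOWED_TASKS.get(name)
    if task is None:
        raise ValueError(f"unknown task: {name!r}")
    return task()


if __name__ == "__main__":
    token = new_session_token()
    print(token_matches("nimda", token))  # False
    print(run_task("clear_cache"))        # cache cleared
```

In the Flask app, `new_session_token()` would replace `username[::-1]` at login, `token_matches()` would replace the `==` check, and `run_task()` would replace `exec(task_code)`. The hard-coded secret key, the hard-coded credentials, and `debug=True` would still need separate fixes.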

Task 4 - Write up

Everything from vulnerability discovery to mitigation is now in hand. It's time for the Report Writer Agent to draft a comprehensive report, detailing each step as a security engineer would.

The Report Writer Agent crafts a report so clear and detailed that it could be treated as a template. It explains what each issue is about and what its impact is, although the agent wasn’t prompted to write about impact. It was a nice touch that the manager agent asked for it.

Now we can see how these agents could be used in our day jobs. Some insights:

Specialization Enhances Efficiency: By dividing tasks among specialized AI agents (Code Reviewer, Exploiter, Mitigation Expert, and Report Writer), the process becomes more streamlined and effective. Each agent, focused on a specific aspect of cybersecurity, can leverage its knowledge to improve accuracy and speed. Each agent can also be backed by a different model.

Dynamic Interaction and Feedback Loop: The interaction between the Manager and the other AI agents (like the Code Reviewer and Mitigation Expert) exemplifies a dynamic feedback loop. This interaction allows for real-time adjustments and deeper investigation into issues that may initially appear less critical, ensuring comprehensive vulnerability management.

Strategic Task Management: The ability of the AI system to break down complex tasks into sub-tasks and strategically approach each phase of the cybersecurity process (from identification to mitigation) shows advanced problem-solving capabilities. This structured approach ensures that each step is handled with the necessary attention and detail.

Challenges

1. Decision Making

Without going into the weeds, we should understand that an LLM's response to whatever we feed it depends on what it was trained on and how it was fine-tuned. It outputs the most probable continuation. This process is what makes the technology feel so amazing and magic-like.

This raises a question: do these language models really know what they are talking about? Do they understand what is being said to them, and how do they reason about the actions they have taken? This is already debated in the community, and the debate is largely philosophical. I’m still conflicted about it, but I lean toward the view that they do not, at least not yet.

2. Overperforming

During the evaluation, there were a couple of scenarios where an agent decided to perform a task itself rather than delegate it, apparently because it judged it could handle the problem alone. For example, in one of my tests the manager, which is itself an LLM, wrote the vulnerability report on its own, but in the second run it delegated the task to the Report Writer agent.

3. Agent's memory

One of the challenges I noticed while working with agents is controlling their memory: removing unnecessary context while keeping important context for future use. Once an agent finishes its tasks, it doesn't remember what it did in its previous run. We can of course store everything and pass it back in as context, but then we will be getting whopping bills. This is one area where agents won’t be able to replace humans effectively.
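One common way to keep the bill down is to send only part of the stored history. The sketch below is an assumption about how that could look, not something the framework provided: `MemoryItem`, `estimate_tokens`, and `build_context` are hypothetical names, and the token estimate is a crude word count rather than a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    pinned: bool = False  # pinned items (e.g. confirmed findings) are always kept


def estimate_tokens(text: str) -> int:
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())


def build_context(history: list[MemoryItem], budget: int) -> list[str]:
    """Keep every pinned item, then add the newest unpinned items that still fit.

    Pinned items are kept even if they exceed the budget on their own.
    The original chronological order is preserved in the result.
    """
    keep = [item.pinned for item in history]
    used = sum(estimate_tokens(item.text) for item in history if item.pinned)
    for idx in range(len(history) - 1, -1, -1):  # walk from newest to oldest
        item = history[idx]
        if item.pinned:
            continue
        cost = estimate_tokens(item.text)
        if used + cost > budget:
            break  # stop at the first item that does not fit
        keep[idx] = True
        used += cost
    return [item.text for item, kept in zip(history, keep) if kept]


if __name__ == "__main__":
    history = [
        MemoryItem("critical finding exec on user input", pinned=True),
        MemoryItem("long chat one two three four five"),
        MemoryItem("fix applied"),
    ]
    print(build_context(history, budget=9))
```

Deciding what to pin is the hard part, and it is exactly the judgment this section describes as difficult to hand over to the agent.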

Conclusion & Future Work

This experiment wasn't just a test of agentic AI; it was a demonstration of how AI can significantly enhance our abilities across a wide range of tasks. This was a very simple example, but as I tune the agents further, I will work on more complex, real-world scenarios where we would use them for critical detections.

As we look ahead, the role of AI in cybersecurity seems set to grow even more significant, promising not only stronger defenses but also more efficient and proactive security solutions. For anyone in cybersecurity, embracing AI agents could well be the key to staying ahead in this ever-evolving field.